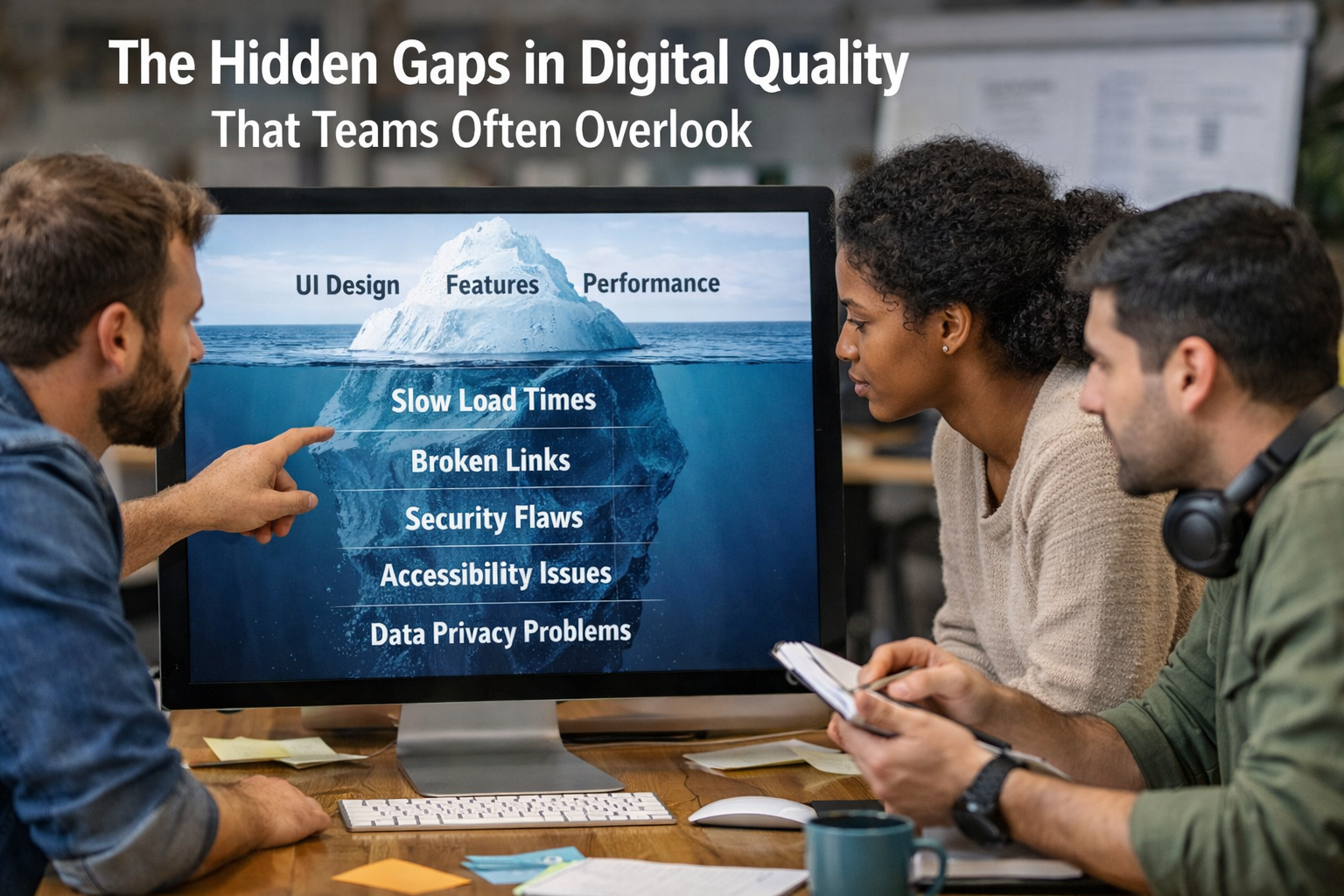

Digital quality issues rarely come from obvious failures. Most teams catch broken builds, failed test cases, and major crashes before release. The real damage is usually caused by smaller gaps that slip through because they are harder to see, harder to reproduce, or assumed to be edge cases.

These gaps often surface only after users are already affected. Complaints rise, engagement drops, and teams scramble to understand what went wrong. This is especially common in mobile app testing and cross-browser testing, where variability is high and controlled test environments hide real-world behavior.

This article looks at the less visible quality gaps teams often overlook and explains why they matter.

Where Web and Cross-Browser Quality Commonly Breaks Down

Within web and cross-browser environments, several gaps show up repeatedly:

- Experience inconsistency despite functional success. Applications may work correctly yet behave very differently across browsers, devices, and screen sizes. Layout shifts, delayed interactions, inconsistent fonts, or timing differences slip through because they do not break functionality. Cross-browser testing often stops at basic compatibility checks, where teams validate a flow on a few browsers and assume the experience holds everywhere else.

- Production drift hidden by stable test setups. Differences in browser versions, OS behavior, device hardware, or third-party dependencies introduce issues that never appear in controlled environments. Because stability is prioritized in test setups, variability is treated as noise rather than as a signal. Web quality that holds only in lab conditions fails once it reaches real users.
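One way to treat variability as a signal rather than noise is to compare the same experience metric across environments and flag the ones that stand apart. The sketch below is a minimal illustration under stated assumptions: the function name `flag_outlier_environments`, the 25% tolerance, and the sample delays are all hypothetical, and the numbers are not real measurements.

```python
from statistics import median


def flag_outlier_environments(measurements, tolerance=0.25):
    """Return environments whose metric deviates from the cross-environment
    median by more than `tolerance` (expressed as a fraction of the median)."""
    baseline = median(measurements.values())
    if baseline == 0:
        # A relative deviation is undefined against a zero baseline.
        return {}
    flagged = {}
    for env, value in measurements.items():
        deviation = (value - baseline) / baseline
        if abs(deviation) > tolerance:
            flagged[env] = round(deviation, 2)
    return flagged


# Hypothetical interaction delays in milliseconds for one user flow.
samples = {
    "chrome-desktop": 120,
    "firefox-desktop": 130,
    "safari-ios": 210,
    "chrome-android": 125,
}

print(flag_outlier_environments(samples))  # {'safari-ios': 0.65}
```

Every environment here would pass a functional check, yet one of them is about 65% slower than the median. The threshold is a judgment call; the point is only that the comparison is made at all.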

Where Mobile Experience Quality Commonly Breaks Down

In mobile environments, quality gaps tend to appear in different ways:

- Sensitivity to real-world network conditions. Many mobile app testing efforts validate flows under reliable connectivity, while real users move between Wi‑Fi and cellular networks, encounter latency spikes, and face intermittent connectivity. Network variability is harder to reproduce and often deprioritized, even though small delays during login, content loading, or transactions quickly increase frustration and abandonment.

- Degraded behavior that avoids hard failures. Instead of crashes or error screens, users experience slow responses, missing content, or partial loading that still allows flows to complete. Because testing and monitoring focus on pass or fail states, this degradation persists unnoticed. Repeated minor friction erodes trust faster than a single visible outage.

- Gradual experience regression across releases. Teams confirm that new functionality works after each release but miss incremental drops in performance or responsiveness. Regression testing prioritizes correctness, while experience drift is harder to quantify and rarely tracked. Over time, the product feels slower or less reliable even though nothing appears broken.
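The last two gaps can be made concrete with a small sketch. It assumes a hypothetical per-flow latency budget and a list of p95 latencies per release; the names `classify_run` and `find_drift`, the thresholds, and the figures are illustrative and not taken from any specific tool.

```python
def classify_run(completed, duration_ms, budget_ms):
    """Add a third outcome between pass and fail: the flow completed,
    but took longer than its latency budget."""
    if not completed:
        return "fail"
    return "degraded" if duration_ms > budget_ms else "pass"


def find_drift(p95_by_release, max_increase=0.10):
    """Compare each release against the FIRST release in the list, not the
    previous one, so that small per-release increases cannot hide a large
    cumulative slowdown. Returns the names of releases over the limit."""
    _, baseline = p95_by_release[0]
    drifted = []
    for release, p95 in p95_by_release[1:]:
        if (p95 - baseline) / baseline > max_increase:
            drifted.append(release)
    return drifted


# Hypothetical p95 latencies (ms) for a login flow across four releases.
history = [("1.0", 800), ("1.1", 840), ("1.2", 870), ("1.3", 910)]

print(classify_run(True, 1800, 1000))  # degraded
print(find_drift(history))             # ['1.3']
```

In this made-up history, no release is more than 5% slower than the one before it, so a release-over-release check with a 10% limit would never fire. Measured against the fixed baseline, release 1.3 is almost 14% slower.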

Why These Gaps Persist

These gaps persist because several structural factors work together:

- Mobile app testing and cross-browser testing involve combinations of devices, OS versions, browsers, networks, and regions that are impossible to cover exhaustively within release timelines.

- As products scale, teams lean on stable test setups to keep automation reliable. This improves efficiency but masks behavior that only appears under real-world conditions.

- Test results and monitoring often emphasize functional success, which allows degraded experience to pass unnoticed as long as flows technically complete.

- Schedules reward predictable execution. Variability is seen as a risk to be minimized rather than as a signal to be observed.

- Performance, reliability, and usability signals are split across teams and tools, making it harder to see experience gaps as a single problem.
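To give a rough sense of scale for the first factor, the sketch below counts the combinations in a small, entirely hypothetical test matrix and then builds a reduced set in which every individual value appears at least once (often called each-choice coverage). The dimension values are placeholders, and this reduction does not guarantee coverage of interactions between dimensions.

```python
from math import prod

# Hypothetical matrix; real projects will have different dimensions and sizes.
dimensions = {
    "device": ["phone-a", "phone-b", "tablet-a", "laptop-a", "laptop-b", "desktop-a"],
    "os_version": ["v1", "v2", "v3", "v4"],
    "browser": ["chrome", "firefox", "safari", "edge"],
    "network": ["wifi", "4g", "3g"],
    "region": ["us", "eu", "in", "br", "jp"],
}

# Exhaustive coverage multiplies the size of every dimension.
total_combinations = prod(len(values) for values in dimensions.values())


def each_choice_configs(dims):
    """Build configurations so that every value of every dimension is used
    at least once, cycling through shorter dimensions as needed."""
    rounds = max(len(values) for values in dims.values())
    return [
        {name: values[i % len(values)] for name, values in dims.items()}
        for i in range(rounds)
    ]


configs = each_choice_configs(dimensions)

print(total_combinations)  # 1440
print(len(configs))        # 6
```

Even this modest matrix yields 1,440 combinations, while six configurations are enough to touch every value once. The gap between those two numbers is where untested interactions live, which is why observing real-world variability matters alongside a reduced test set.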

Conclusion: Quality Is Defined by What Users Experience

Digital quality is not defined by the absence of obvious defects. It is defined by consistency, responsiveness, and reliability across the environments users actually operate in.

When teams look beyond functional correctness and address the hidden gaps in mobile app testing and cross-browser testing, quality stops being reactive. It becomes something teams can observe, understand, and improve deliberately.